Vision

2024-Sep-22

What's your startup about? That's not a question any founder is expected to fumble. Yet here I am, three years and countless pivots after founding, pondering this question more often than I can remember.

When we started susuROBO, the biggest pain points we saw on the way to conversational interaction were natural language understanding, generation, and dialog management. Since then, even this neat modular view of NLP has been blown up by LLMs.

Since their popularization, LLMs have accelerated progress in conversational AI to the point where the list of biggest pain points can change weekly. With the target moving this fast, we need a constant reminder of our North Star: a vision of the ultimate goal. This vision is not a concrete product, nor something achievable on a predictable timeline. Instead, it guides us toward the right choices at the many forks along our product and business development paths.

Our vision is to enable everyone to access and benefit from AI through advancements in user interface.

Why is UI important for AI? To interact successfully with any technology, users need at least a rough mental model of how it works. The better the UI, the less effort it takes to build that mental model.

When interacting with an LLM via text, having the right mental model of how an LLM works lets users craft their input to get better responses. Calling this "prompt engineering" highlights its complexity and discourages the less determined among us from even trying.

Conversing with AI via voice adds some extra layers to the mental model users have to keep in mind:

- Can I interrupt or talk over it?

- How long should I wait for the response?

- If there is a misunderstanding, how should I adjust my wording, pronunciation, speed, etc.?

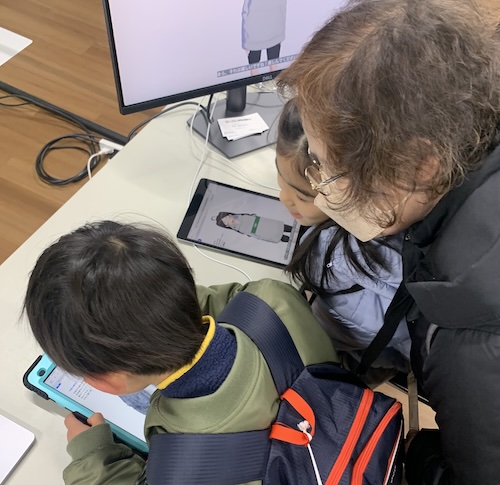

Just as with prompt engineering, there are experts in conversing with AI via voice (have you seen the Siri whisperers who handle their email while driving?). Unfortunately, most of us are not. In fact, our research shows that it is especially difficult for the young and the elderly. Incidentally, these are the same groups of users who may benefit from voice interaction the most, since for many of them text input may be even less practical.

Humans solve these problems with filler words ("uh" and "uhm") and non-verbal behaviors such as head nods and eye gaze, among many others. It's not a big stretch to think, as we do, that replicating some of these capabilities in conversational AI systems will increase accessibility, broaden acceptance, and allow more people to benefit from the AI revolution.

In the following posts, we will go into detail about how exactly we approach these issues. Let the conversation begin!