Are realtime audio-to-audio models ready to replace your VAD→STT→LLM→TTS pipeline?

2025-Sep-04

As context windows grow and models get better at reading intent, users are shifting to vibe interaction: pile on context, describe the task, and let the model do the magic. Increasingly, the first response works, which reinforces the pattern. In that world, guiding the model with heavy chain-of-thought orchestration is often unnecessary or even harmful, since you can bias the reasoning toward your own wrong assumptions.

Similarly, in long-form interaction use cases (entertainment, therapy, education), where success equals sustained engagement, the days of rigid VAD → STT → LLM → TTS orchestration feel numbered. Humans coordinate their turns using timing, tone, and non-verbal cues; realtime audio-to-audio models trained on those signals should handle turn-taking more naturally than a waterfall pipeline, even a low-latency one such as Pipecat.

There's a catch, though: like physics engines in games, they still need guardrails and flow control.

Realtime audio-to-audio LLMs and game physics engines

In the 8-bit era, you could script interactions between each and every object:

if (ball.position.y near floor) reverse ball.velocity.y

Likewise, a rule-based dialog system might say:

if (user greets) reply with a greeting.

As realism rose, hand-authoring every interaction became unwieldy. Game devs introduced a physics layer that makes millions of micro-interactions look right, whether it's a bump in Mario Kart or Norman Reedus's Sam leaving crisp footprints in deep snow. Similarly, LLMs now do the conversational heavy lifting. New realtime APIs even encourage entrusting them with the whole flow of the conversation.

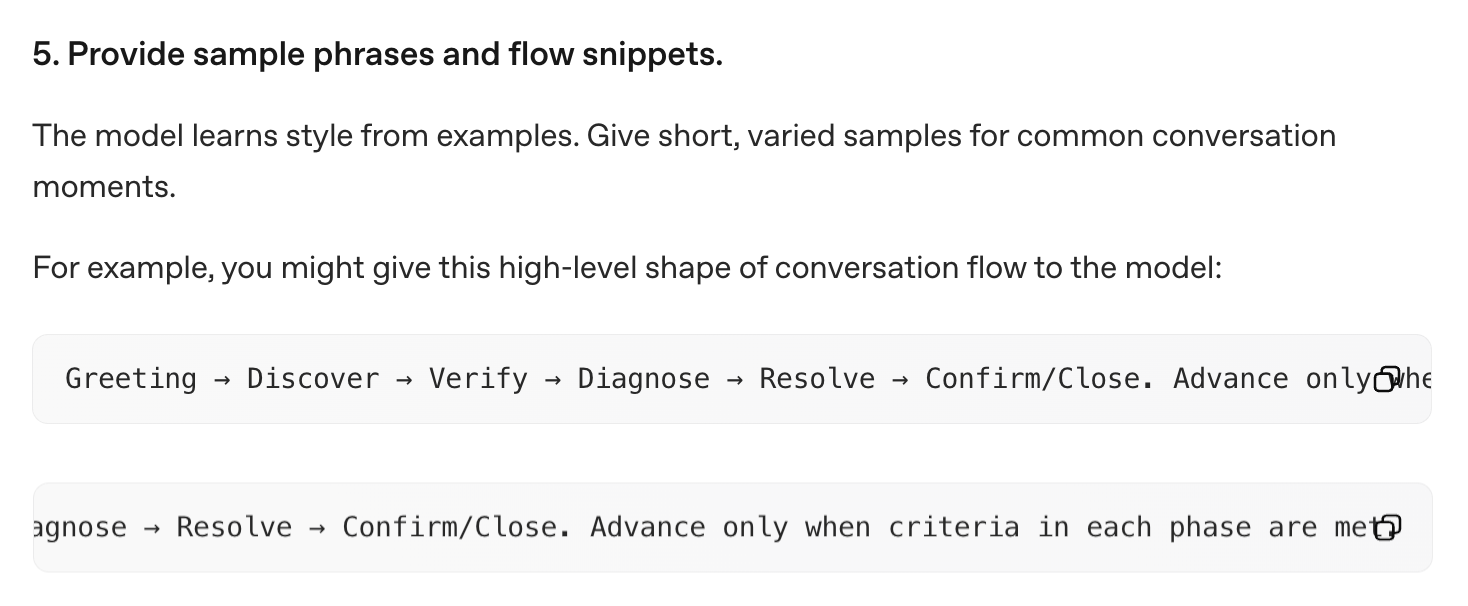

"Prompt and keep your fingers crossed" style of dialog management from OpenAI Realtime API documentation

But, just like game physics engines, LLMs alone can't manage the flow of a longer interaction. Over-reliance on physics engines produced classic glitches like tunneling through walls or debris soft-locking doors. Pure LLM flow has its analogs: derailments, looping, brittle prompts.

So we borrow the game playbook: add a supervision layer on top of the LLM's "physics."

Two types of supervision

1. Reactive (watchdogs)

In Games

Kill-planes (world boundaries) & timeouts

In Conversational AI

If the dialog drifts for N seconds or turns → snap back with a forced, short clarifier.
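A minimal sketch of such a drift watchdog, counting turns rather than seconds. The class name `DriftWatchdog`, the threshold, and the idea that some upstream classifier tells you whether a turn is on topic are all assumptions made for illustration; they are not part of any realtime API.

```python
from dataclasses import dataclass


@dataclass
class DriftWatchdog:
    """Counts consecutive off-topic turns and signals when to snap back."""

    max_off_topic_turns: int = 3  # hypothetical threshold (the "N" above)
    off_topic_turns: int = 0

    def observe(self, turn_is_on_topic: bool) -> bool:
        """Record one turn; return True when a forced clarifier is due."""
        if turn_is_on_topic:
            self.off_topic_turns = 0
            return False
        self.off_topic_turns += 1
        if self.off_topic_turns >= self.max_off_topic_turns:
            self.off_topic_turns = 0  # start counting again after firing
            return True
        return False


watchdog = DriftWatchdog(max_off_topic_turns=2)
for on_topic in [True, False, False]:
    if watchdog.observe(on_topic):
        print("inject clarifier: 'Shall we get back to the exercise?'")
```

The on-topic decision itself is the hard part and is left out here; it could be anything from keyword matching to a small classifier.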

In Games

Flow aborts

In Conversational AI

Monitor both the user STT transcription and the LLM output stream for hard requirements that need deterministic handling (specific topics or phrases). On trigger → cut the LLM/TTS output and inject an appropriate response.
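One possible shape for such a flow abort is a tripwire fed with transcript deltas as they stream in. This is a sketch under assumptions: `StreamTripwire`, the pattern labels, and the buffer size are hypothetical, and the actual cutting of LLM/TTS output depends on the API in use and is not shown.

```python
import re
from typing import Dict, Optional


class StreamTripwire:
    """Scans streamed text for trigger patterns that span chunk boundaries."""

    def __init__(self, patterns: Dict[str, str], max_chars: int = 500):
        # label -> compiled, case-insensitive regex
        self._patterns = {
            label: re.compile(rx, re.IGNORECASE) for label, rx in patterns.items()
        }
        self._max_chars = max_chars
        self._buffer = ""

    def feed(self, delta: str) -> Optional[str]:
        """Append a text delta; return the label of the first pattern that fires."""
        # Keep only a sliding window so the buffer cannot grow without bound.
        self._buffer = (self._buffer + delta)[-self._max_chars:]
        for label, pattern in self._patterns.items():
            if pattern.search(self._buffer):
                self._buffer = ""  # avoid re-firing on the same text
                return label
        return None


tripwire = StreamTripwire({"refund": r"\brefund\b"})
for chunk in ["I want a re", "fund please"]:
    label = tripwire.feed(chunk)
    if label:
        print(f"abort flow, play scripted '{label}' response")
```

One instance per stream (user transcript, model transcript) keeps the two sources from interfering with each other.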

2. Proactive (guardrails)

In Games

Cutscene inserts

In Conversational AI

- Short pre-authored audio beats for handoffs, disclosures, or sensitive transitions (deterministic wording and prosody).

- Closed-loop questions for tight question-answering interactions.

- Pre-processing of user transcriptions to check precise conditions, for example: "The user pronounced 80% of the words in the reference phrase correctly."
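The last kind of condition can be approximated deterministically from the transcript. The sketch below assumes the STT transcript is a fair proxy for what the user pronounced, which is a simplification: a recognition error is not the same thing as a mispronunciation. The function names and the 0.8 threshold are illustrative.

```python
import re
from collections import Counter
from typing import List


def tokenize(text: str) -> List[str]:
    """Lowercase the text and split it into words, dropping punctuation."""
    return re.findall(r"[a-z']+", text.lower())


def fraction_matched(reference: str, transcript: str) -> float:
    """Share of reference words that also appear in the transcript.

    Word order is ignored; each transcript word can match only once.
    """
    ref_words = tokenize(reference)
    if not ref_words:
        return 0.0
    available = Counter(tokenize(transcript))
    hits = 0
    for word in ref_words:
        if available[word] > 0:
            available[word] -= 1
            hits += 1
    return hits / len(ref_words)


def passes(reference: str, transcript: str, threshold: float = 0.8) -> bool:
    """True when enough of the reference phrase was recognized."""
    return fraction_matched(reference, transcript) >= threshold


print(passes("the quick brown fox jumps", "the quick brown box jumps"))
```

Because the check runs outside the model, its outcome is reproducible and can drive a flow decision directly.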

In Games

Scene transitions

In Conversational AI

Deterministic flow transitions, triggered when conditions on the long-term context (the session so far), the short-term context (the latest turns), or both are satisfied.
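Such transitions can be sketched as a small table-driven state machine evaluated outside the model. Everything here is hypothetical: the stage names, the `Context` fields, and the thresholds only illustrate how a long-context signal (a session-wide counter) and a short-context signal (the latest user utterance) might combine.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class Context:
    completed_exercises: int = 0  # long-context signal, accumulated over the session
    last_user_text: str = ""      # short-context signal, latest turn only


Condition = Callable[[Context], bool]

# stage -> ordered list of (condition, target stage); the first match wins
TRANSITIONS: Dict[str, List[Tuple[Condition, str]]] = {
    "warmup": [(lambda c: c.completed_exercises >= 2, "lesson")],
    "lesson": [
        (lambda c: "stop" in c.last_user_text.lower(), "wrapup"),
        (lambda c: c.completed_exercises >= 5, "wrapup"),
    ],
    "wrapup": [],
}


def next_stage(stage: str, ctx: Context) -> str:
    """Return the stage to move to, or the current stage if nothing fires."""
    for condition, target in TRANSITIONS.get(stage, []):
        if condition(ctx):
            return target
    return stage


print(next_stage("warmup", Context(completed_exercises=2)))
print(next_stage("lesson", Context(3, "Please stop for today")))
```

On a transition, the supervisor would typically swap the model's instructions for the new stage; how that is done depends on the API.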

Example cutscene of grabbing a rucksack in Death Stranding 2

To summarize the analogy:

| | Games | Conversational AI |
|---|---|---|
| Temporal unit | Animation frame | Conversation turn |
| Simple/early | Rule-based physics applied at every animation frame | Turn-by-turn control by intervening in the VAD-STT-LLM-TTS loop |
| Complex/modern | Physics engine with guardrails and external logic | Realtime LLM with guardrails and external logic |
| Reactive supervision (watchdogs) | Kill-planes, timeouts | Dialog drift/loop classifiers, topic resets, flow aborts |
| Proactive supervision (rails) | Cutscene animation sequences with limited interactivity; scene transitions | Pre-recorded outputs, closed-loop questions; flow transitions |

What today's realtime APIs give you (and don't)

| | OpenAI Realtime | Gemini Live |
|---|---|---|
| User transcription intercept before the model's response | Enabled by session settings: `"input_audio_transcription": { "model": "whisper-1" }`<br>Note: Transcription runs asynchronously with response creation, so this event can come before or after the response events. Realtime API models accept audio natively, and thus input transcription is a separate process run on a separate speech recognition model such as whisper-1. Thus the transcript can diverge somewhat from the model's interpretation, and should be treated as a rough guide. (Microsoft doc) | Received as part of the response message for the `BidiGenerateContent` call: `serverContent.inputTranscription`<br>Note: The transcription is sent independently of the other server messages and there is no guaranteed ordering. (Google doc) |
| Model output transcription intercept before the model's audio response | `response.text.delta`<br>`response.audio_transcript.delta`<br>Note: Timing not clear. | `server_content.output_transcription`<br>Note: The transcription is independent to the model turn which means it doesn't imply any ordering between transcription and model turn. (Google doc) |
| On-the-fly context update | `session.update` (all except voice) | `generationConfig` (all except model) |
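To make the ordering caveat concrete, here is a sketch that builds the session settings from the table as a `session.update` message and checks, on a recorded list of server events, whether the user transcript arrived before the first audio chunk. The two event type strings are my understanding of the OpenAI Realtime beta event names and should be verified against the current API reference; the helper `transcript_arrived_first` is hypothetical.

```python
import json
from typing import Dict, Iterable, Optional

# Session settings from the table, wrapped in a session.update client event.
SESSION_UPDATE = {
    "type": "session.update",
    "session": {"input_audio_transcription": {"model": "whisper-1"}},
}

# Assumed server event names; check them against the current documentation.
TRANSCRIPT_EVENT = "conversation.item.input_audio_transcription.completed"
AUDIO_EVENT = "response.audio.delta"


def transcript_arrived_first(events: Iterable[Dict]) -> Optional[bool]:
    """True if the user transcript precedes the first audio delta,
    False if audio came first, None if neither event was seen."""
    for event in events:
        kind = event.get("type")
        if kind == TRANSCRIPT_EVENT:
            return True
        if kind == AUDIO_EVENT:
            return False
    return None


print(json.dumps(SESSION_UPDATE))
recorded = [{"type": AUDIO_EVENT}, {"type": TRANSCRIPT_EVENT}]
print(transcript_arrived_first(recorded))
```

Running a check like this over real session logs gives a rough measure of how often a transcript-based intercept would have been too late.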

To recap: if your supervision needs to intercept the input or output transcript strictly before output audio generation starts, realtime models may not be a good fit. In future posts we will propose workarounds for a few typical patterns.